Relion

Short guide to using Relion (compiled with GNU tools)

Connect to the server gui.hpc.unipr.it using:

ssh -X name.surname@gui.hpc.unipr.it (on Linux)

or

MobaXterm or Remote Desktop (on Windows)

Open a terminal and enter the following commands:

[user@ui03 ~]$ newgrp <GROUP>
newgrp: group '<GROUP>'
(<GROUP>)[user@ui03 ~]$ cd $GROUP
(<GROUP>)[user@ui03 <GROUP>]$ cd $USER
(<GROUP>)[user@ui03 <USER>]$ mkdir -p relion/test
(<GROUP>)[user@ui03 <USER>]$ cd relion/test
(<GROUP>)[user@ui03 test]$ module load gnu8 openmpi3 relion
(<GROUP>)[user@ui03 test]$ module list
Currently Loaded Modules:
  1) gnu8/8.3.0        3) ucx/1.14.0       5) ctffind/4.1.13   7) chimera/1.14   9) relion/4.0.1-cpu
  2) libfabric/1.13.1  4) openmpi3/3.1.6   6) resmap/1.1.4     8) topaz/0.2.5
(<GROUP>)[user@ui03 test]$ echo $RELION_QSUB_TEMPLATE
/hpc/share/applications/gnu8/openmpi3/relion/4.0.1/cpu/bin/sbatch.sh
(<GROUP>)[user@ui03 test]$ relion

We assume that the directory

/hpc/group/<GROUP>/<USER>/relion/test

contains an example case to test how Relion works.

The file

/hpc/share/applications/gnu8/openmpi3/relion/4.0.1/cpu/bin/sbatch.sh

is the script to submit the job to the SLURM queue manager.
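Before starting the GUI it can help to confirm that the template is actually visible from the current shell. The following is a minimal sketch; `check_qsub_template` is a hypothetical helper name, not something provided by Relion or by the cluster.

```shell
# Sketch: verify that the Relion submission template is usable.
# check_qsub_template is a hypothetical helper, not part of Relion.
check_qsub_template() {
    # $1: path to the template (defaults to $RELION_QSUB_TEMPLATE)
    qsub_template="${1:-${RELION_QSUB_TEMPLATE:-}}"
    if [ -z "$qsub_template" ]; then
        echo "RELION_QSUB_TEMPLATE is not set: load the relion module first" >&2
        return 1
    fi
    if [ ! -r "$qsub_template" ]; then
        echo "Template not readable: $qsub_template" >&2
        return 1
    fi
    echo "Using submission template: $qsub_template"
}
```

Calling `check_qsub_template` with no argument after `module load gnu8 openmpi3 relion` checks the path stored in RELION_QSUB_TEMPLATE.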

GPU processing

If you intend to launch a processing job that uses GPU acceleration, in

RELION/2D classification/Compute
RELION/3D initial model/Compute
RELION/3D classification/Compute
RELION/3D auto refine/Compute
RELION/3D multi-body/Compute

set

| Use GPU acceleration? | Yes |

| Which GPUs to use: | $GPUID |

Set the various parameters in

RELION/2D classification/Running
RELION/3D initial model/Running
RELION/3D classification/Running
RELION/3D auto refine/Running
RELION/3D multi-body/Running

In particular:

| Submit to queue? | Yes |

| Queue name: | gpu |

| Queue submit command: | sbatch |

| Total run time: | D-HH:MM:SS (estimated) |

| Charge resources used to: | <account> |

| Real memory required per node: | <quantity>G (estimated) |

| Generic consumable resources: | gpu:<type of gpu>:<quantity per node> (from 1 to 6) |

| Additional (extra5) SBATCH directives: | --nodes=<number of nodes> (optional) |

| Additional (extra6) SBATCH directives: | --ntasks-per-node=<number of tasks per node> (optional) |

| Additional (extra7) SBATCH directives: | --reservation=<reservation name> (optional) |

| Standard submission script: | /hpc/share/applications/gnu8/openmpi3/relion/4.0.1/gpu/bin/sbatch.sh |

| Current job: | <job name> |

| Additional arguments: | <options to add to the command that will be executed> (optional) |
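The "Generic consumable resources" value follows the pattern gpu:<type of gpu>:<quantity per node>. As a rough sketch, a small shell helper can assemble and validate that string before it is typed into the GUI. `build_gres` is a hypothetical name, and the GPU type `a100` used in the usage note is only a placeholder: the types actually available depend on the cluster.

```shell
# Sketch: build the "Generic consumable resources" string for the gpu partition.
# build_gres is a hypothetical helper; it is not part of Relion or SLURM.
build_gres() {
    # $1: GPU type (site-specific), $2: GPUs per node (1 to 6)
    gres_type="$1"
    gres_count="$2"
    if [ -z "$gres_type" ]; then
        echo "GPU type must not be empty" >&2
        return 1
    fi
    case "$gres_count" in
        [1-6]) ;;
        *) echo "GPUs per node must be between 1 and 6" >&2; return 1 ;;
    esac
    echo "gpu:${gres_type}:${gres_count}"
}
```

For example, `build_gres a100 2` prints `gpu:a100:2`, where `a100` stands in for a real GPU type.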

Submit the job with "Run!".

In the terminal window the following message appears:

Submitted batch job <SLURM_JOB_ID>

Check the status of the queues with the following command (run it in a second terminal window):

hpc-squeue -u $USER

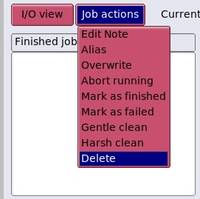

To cancel the job, delete it in Relion with "Delete", then cancel it in the SLURM queue as well (Relion does not do this automatically):

scancel <SLURM_JOB_ID>
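The job ID needed by scancel is the last word of the "Submitted batch job" message. A minimal sketch for extracting it, assuming the message has exactly that form; `job_id_from_message` is a hypothetical helper name.

```shell
# Sketch: extract the SLURM job ID from the message printed at submission.
# job_id_from_message is a hypothetical helper, not a SLURM command.
job_id_from_message() {
    # $1: the line printed at submission, e.g. "Submitted batch job 12345"
    case "$1" in
        "Submitted batch job "*)
            # Strip everything up to and including the last space.
            echo "${1##* }"
            ;;
        *)
            echo "Unexpected submission message: $1" >&2
            return 1
            ;;
    esac
}

# Usage (commented out because it would cancel a real job):
# scancel "$(job_id_from_message "Submitted batch job 12345")"
```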

Submit to the gpu_guest partition

To submit the job to the gpu_guest partition:

| Queue name: | gpu_guest |

| Standard submission script: | /hpc/share/applications/gnu8/openmpi3/relion/4.0.1/gpu/bin/sbatch.sh |

CPU processing

Submit to cpu partition

If you intend to launch a processing job that uses only the CPU, in

RELION/2D classification/Compute
RELION/3D initial model/Compute
RELION/3D classification/Compute
RELION/3D auto refine/Compute
RELION/3D multi-body/Compute

set

| Use GPU acceleration? | No |

Set the various parameters in

RELION/2D classification/Running
RELION/3D initial model/Running
RELION/3D classification/Running
RELION/3D auto refine/Running
RELION/3D multi-body/Running

In particular:

| Submit to queue? | Yes |

| Queue name: | cpu |

| Queue submit command: | sbatch |

| Total run time: | D-HH:MM:SS (estimated) |

| Charge resources used to: | <account> |

| Real memory required per node: | <quantity>G (estimated) |

| Generic consumable resources: | |

| Additional (extra5) SBATCH directives: | --nodes=<number of nodes> (optional) |

| Additional (extra6) SBATCH directives: | --ntasks-per-node=<number of tasks per node> (optional) |

| Additional (extra7) SBATCH directives: | --reservation=<reservation name> (optional) |

| Standard submission script: | /hpc/share/applications/gnu8/openmpi3/relion/4.0.1/cpu/bin/sbatch.sh |

| Current job: | <job name> |

| Additional arguments: | <options to add to the command that will be executed> (optional) |
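The "Total run time" field expects the D-HH:MM:SS format. As an illustration, the sketch below converts an estimate in seconds into that format; `format_runtime` is a hypothetical helper name.

```shell
# Sketch: convert an estimated run time in seconds to D-HH:MM:SS.
# format_runtime is a hypothetical helper, not part of Relion or SLURM.
format_runtime() {
    # $1: estimated run time in seconds (non-negative integer)
    rt_total="$1"
    rt_days=$(( rt_total / 86400 ))
    rt_hours=$(( (rt_total % 86400) / 3600 ))
    rt_minutes=$(( (rt_total % 3600) / 60 ))
    rt_seconds=$(( rt_total % 60 ))
    printf '%d-%02d:%02d:%02d\n' "$rt_days" "$rt_hours" "$rt_minutes" "$rt_seconds"
}
```

For example, `format_runtime 93784` prints `1-02:03:04` (one day, two hours, three minutes and four seconds).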

Submit the job with "Run!".

In the terminal window the following message appears:

Submitted batch job <SLURM_JOB_ID>

Check the status of the queues with the following command (run it in a second terminal window):

hpc-squeue -u $USER

To cancel the job, delete it in Relion with "Delete", then cancel it in the SLURM queue as well (Relion does not do this automatically):

scancel <SLURM_JOB_ID>

Submit to knl partition

To submit the job to the knl partition:

| Queue name: | knl |

| Standard submission script: | /hpc/share/applications/gnu8/openmpi3/relion/4.0.1/knl/bin/sbatch.sh |

The choice of parameters

The number of CPUs required is equal to the product of Number of MPI processors and Number of threads.

The following limits must be respected; otherwise the submitted job will remain in the queue indefinitely:

| Partition | Number of CPUs | Number of GPUs |
|---|---|---|
| gpu | >= 1 | 1-6 |
| gpu_guest | >= 1 | 1-2 |
| cpu | >= 2 | 0 |
| knl | >= 2 | 0 |
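The CPU count rule and the partition limits above can be checked before submitting. The following is a sketch only: `check_request` is a hypothetical helper that hard-codes the limits from the table, so it must be updated by hand if the cluster configuration changes.

```shell
# Sketch: compute the required CPUs and check them against the partition limits.
# check_request is a hypothetical helper; the limits are copied from the table.
check_request() {
    # $1: partition, $2: Number of MPI processors, $3: Number of threads,
    # $4: number of GPUs (optional, defaults to 0)
    req_part="$1"
    req_cpus=$(( $2 * $3 ))
    req_gpus="${4:-0}"
    case "$req_part" in
        gpu)       min_cpus=1; min_gpus=1; max_gpus=6 ;;
        gpu_guest) min_cpus=1; min_gpus=1; max_gpus=2 ;;
        cpu|knl)   min_cpus=2; min_gpus=0; max_gpus=0 ;;
        *) echo "Unknown partition: $req_part" >&2; return 1 ;;
    esac
    if [ "$req_cpus" -lt "$min_cpus" ] ||
       [ "$req_gpus" -lt "$min_gpus" ] ||
       [ "$req_gpus" -gt "$max_gpus" ]; then
        echo "Outside limits for $req_part: $req_cpus CPUs, $req_gpus GPUs" >&2
        return 1
    fi
    # Print the number of CPUs the job will require.
    echo "$req_cpus"
}
```

For example, `check_request gpu 3 4 2` prints `12` (3 MPI processors times 4 threads), while `check_request cpu 1 1` fails because the cpu partition needs at least 2 CPUs.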

The number of allocated nodes depends on the number of CPUs required, the number of CPUs per node (which depends on the type of node), and the availability of free or partially occupied nodes.

The number of nodes can be specified using the SBATCH directive

--nodes

The number of tasks per node can be specified using the SBATCH directive

--ntasks-per-node
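When both directives are used together, their values have to be consistent with the number of MPI processes. As a sketch, assuming the tasks are spread evenly and each node runs at most the chosen number of tasks, the node count is the ceiling of tasks divided by tasks per node. `nodes_for_tasks` and `sbatch_layout` are hypothetical helper names.

```shell
# Sketch: derive consistent --nodes and --ntasks-per-node values.
# Both helpers are hypothetical; they only do arithmetic and print text.
nodes_for_tasks() {
    # $1: total number of MPI tasks, $2: tasks per node
    # Integer ceiling division: (tasks + per_node - 1) / per_node
    echo $(( ($1 + $2 - 1) / $2 ))
}

sbatch_layout() {
    # $1: total number of MPI tasks, $2: tasks per node
    layout_nodes=$(nodes_for_tasks "$1" "$2")
    printf '%s\n' "--nodes=${layout_nodes} --ntasks-per-node=$2"
}
```

For example, `sbatch_layout 8 4` prints `--nodes=2 --ntasks-per-node=4`; the two values go into the extra5 and extra6 fields respectively.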

For more information on the SBATCH directives, run (in a second terminal window):

man sbatch

To check how efficiently the job used the requested resources, run (in a second terminal window, after the job has ended):

seff <SLURM_JOB_ID>

The use of MOTIONCOR2 with Relion

In

RELION/Motion correction/Motion

set

| Use RELION's own implementation? | No |

| MOTIONCOR2 executable: | "$RELION_MOTIONCOR2_EXECUTABLE" or "$(which MotionCor2)" |

| Which GPUs to use: | $GPUID |

When SLURM starts the job submitted with "Run!" in the "Running" tab, the "Standard submission script" loads the "relion" module which defines the environment variable RELION_MOTIONCOR2_EXECUTABLE.

The Number of MPI processors must match the number of GPUs.

The use of CTFFIND-4.1 with Relion

In

RELION/CTF estimation/CTFFIND-4.1

set

| Use CTFFIND-4.1? | Yes |

| CTFFIND-4.1 executable: | "$RELION_CTFFIND_EXECUTABLE" or "$(which ctffind)" |

When SLURM starts the job submitted with "Run!" in the "Running" tab, the "Standard submission script" loads the "relion" module which defines the environment variable RELION_CTFFIND_EXECUTABLE.

The use of Gctf with Relion

In

RELION/CTF estimation/Gctf

set

| Use Gctf instead? | Yes |

| Gctf executable: | "$RELION_GCTF_EXECUTABLE" or "$(which Gctf)" |

| Which GPUs to use: | $GPUID |

When SLURM starts the job submitted with "Run!" in the "Running" tab, the "Standard submission script" loads the "relion" module which defines the environment variable RELION_GCTF_EXECUTABLE.

The use of ResMap with Relion

In

RELION/Local resolution/ResMap

set

| Use ResMap? | Yes |

| ResMap executable: | "$RELION_RESMAP_EXECUTABLE" or "$(which ResMap)" |

When SLURM starts the job submitted with "Run!" in the "Running" tab, the "Standard submission script" loads the "relion" module which defines the environment variable RELION_RESMAP_EXECUTABLE.
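The executable fields for MOTIONCOR2, CTFFIND-4.1, Gctf and ResMap all accept either the RELION_*_EXECUTABLE variable or a PATH lookup. The sketch below shows the same choice as a shell function that can be run interactively after loading the relion module. `resolve_executable` is a hypothetical helper name, and it uses `command -v` in place of `which` for the lookup.

```shell
# Sketch: pick an external executable the same way the GUI fields suggest.
# resolve_executable is a hypothetical helper, not part of Relion.
resolve_executable() {
    # $1: value of a RELION_*_EXECUTABLE variable (may be empty)
    # $2: command name to look up on PATH as a fallback
    if [ -n "$1" ] && [ -x "$1" ]; then
        echo "$1"
    elif command -v "$2" >/dev/null 2>&1; then
        command -v "$2"
    else
        echo "Cannot find executable: $2" >&2
        return 1
    fi
}

# Usage, after "module load gnu8 openmpi3 relion":
# resolve_executable "${RELION_CTFFIND_EXECUTABLE:-}" ctffind
```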

ResMap

ResMap can be used independently of Relion.

CPU version 1.1.4:

module load resmap

ResMap-Latest supports the use of NVIDIA GPUs.

GPU version 1.95 (requires CUDA 8.0):

module load cuda/8.0 resmap

GPU version 1.95 (requires CUDA 9.0):

module load cuda/9.0 resmap

Chimera

UCSF Chimera can be used independently of Relion, either in combination with ResMap or on its own.

module load chimera