
HPC Benchmark

Intel IMB

The Intel® MPI Benchmarks perform a set of MPI performance measurements for point-to-point and global communication operations for a range of message sizes. The generated benchmark data fully characterizes:

- Performance of a cluster system, including node performance, network latency, and throughput

- Efficiency of the MPI implementation used

https://software.intel.com/en-us/articles/intel-mpi-benchmarks

IMB-MPI1

Repository of the test programs: /hpc/share/samples/imb/

Memory speed test

Script IMB-n1.sh. PingPong between two processes on a single BDW node (intra-node transfer, so the network is not involved).

    #!/bin/sh
    # Two processes on the same host, same socket
    #SBATCH --output=%x.o%j
    #SBATCH --partition=bdw
    # 1 whole node with at least 2 cores
    #SBATCH -N1 --tasks-per-node=2
    #SBATCH --exclusive
    #SBATCH --account=T_HPC18A
    ##SBATCH --reservation corso_hpc18a_7

    ## Print the list of the assigned resources
    echo "#SLURM_JOB_NODELIST: $SLURM_JOB_NODELIST"

    module load intel intelmpi

    CMD="mpirun /hpc/group/T_HPC18A/bin/IMB-MPI1 pingpong -off_cache -1"
    echo "# $CMD"
    eval $CMD > IMB-N1-BDW.dat

Script IMB-N1-KNL.sh. PingPong between two processes on a single KNL node.

    #!/bin/sh
    # Two processes on the same host, same socket
    #SBATCH --output=%x.o%j
    #SBATCH --partition=knl
    # 1 whole node with at least 2 cores
    #SBATCH -N1 --tasks-per-node=2
    #SBATCH --exclusive
    #SBATCH --account=T_HPC18A
    ##SBATCH --reservation corso_hpc18a_7

    ## Print the list of the assigned resources
    echo "#SLURM_JOB_NODELIST: $SLURM_JOB_NODELIST"

    module load intel intelmpi

    CMD="mpirun /hpc/group/T_HPC18A/bin/IMB-MPI1.knl pingpong -off_cache -1"
    echo "# $CMD"
    eval $CMD > IMB-N1-KNL.dat

Network speed test

Script IMB-N2.sh. Pingpong between two processes on different BDW nodes.

    #!/bin/sh
    # Two processes on two different hosts
    #SBATCH --output=%x.o%j
    #SBATCH --partition=bdw
    # 2 nodes with 1 core per node
    #SBATCH -N2 --tasks-per-node=1
    ##SBATCH --exclusive
    #SBATCH --account=T_HPC18A
    ##SBATCH --reservation corso_hpc18a_7

    ## Print the list of the assigned resources
    echo "#SLURM_JOB_NODELIST: $SLURM_JOB_NODELIST"

    module load intel intelmpi

    mpirun hostname

    # Default fabric (OPA)
    CMD="mpirun /hpc/group/T_HPC18A/bin/IMB-MPI1 pingpong -off_cache -1"
    echo "# $CMD"
    eval $CMD > IMB-N2-opa.dat

    # Same test forced onto TCP
    export I_MPI_FABRICS=shm:tcp
    CMD="mpirun /hpc/group/T_HPC18A/bin/IMB-MPI1 pingpong -off_cache -1"
    echo "# $CMD"
    eval $CMD > IMB-N2-tcp.dat
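The `.dat` files written by these jobs can be condensed into two numbers each. The helper below is only a sketch: `imb_summary` is a hypothetical name, and it assumes the usual IMB PingPong layout in which every data row has four numeric columns (bytes, repetitions, t[usec], Mbytes/sec) and the first row is the smallest message.

```sh
#!/bin/sh
# Summarize an IMB PingPong result file: latency of the smallest message
# and peak bandwidth. Assumes four numeric columns per data row:
# bytes, repetitions, t[usec], Mbytes/sec (header and comment rows are skipped).
imb_summary() {
    awk '
        NF == 4 && $1 ~ /^[0-9]+$/ && $2 ~ /^[0-9]+$/ && $3 ~ /^[0-9.]+$/ && $4 ~ /^[0-9.]+$/ {
            if (!seen) { lat = $3; seen = 1 }   # first data row = smallest message
            if ($4 + 0 > bw) bw = $4 + 0        # keep the highest bandwidth seen
        }
        END { if (seen) printf "latency_us=%.2f peak_MBps=%.2f\n", lat, bw }
    ' "$1"
}
```

Typical use would be `imb_summary IMB-N2-opa.dat` followed by `imb_summary IMB-N2-tcp.dat` to compare the two fabrics side by side.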

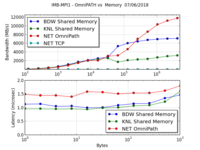

Results 2018 (Intel compiler):

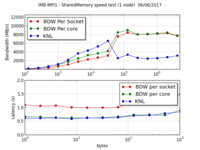

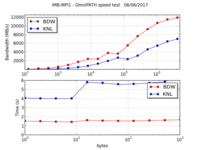

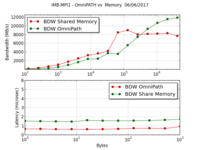

Results 2017 (Intel compiler):

mpi_latency, mpi_bandwidth

    module load intel intelmpi
    #module load gnu openmpi

    cp /hpc/share/samples/mpi/mpi_latency.c .
    cp /hpc/share/samples/mpi/mpi_bandwidth.c .

    mpicc mpi_latency.c -o mpi_latency
    mpicc mpi_bandwidth.c -o mpi_bandwidth

Script mpi_lat_band.slurm:

    #!/bin/sh
    #SBATCH --output=%x.o%j
    #SBATCH --partition=bdw
    ### 2 nodes (OPA or TCP)
    #SBATCH -N2 --tasks-per-node=1
    ### 1 node (SHM)
    ##SBATCH -N1 --tasks-per-node=2
    #SBATCH --exclusive
    #SBATCH --account=T_2018_HPCCALCPAR
    ##SBATCH --reservation hpcprogpar_20190517

    ## Print the list of the assigned resources
    echo "#SLURM_JOB_NODELIST: $SLURM_JOB_NODELIST"

    module load intel intelmpi

    mpirun mpi_latency > mpi_latency_OPA.dat
    mpirun mpi_bandwidth > mpi_bandwidth_OPA.dat

    export I_MPI_FABRICS=shm:tcp
    mpirun mpi_latency > mpi_latency_TCP.dat
    mpirun mpi_bandwidth > mpi_bandwidth_TCP.dat

Results 2019 (Intel compiler):

| Fabric | Latency (µs) | Bandwidth (MB/s) |
|---|---|---|
| SHM | 3 | 7170 |
| OPA | 3 | 6600 |
| TCP | 86 | 117 |
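To see what these figures mean for a real message, a simple first-order model is t = latency + size / bandwidth. The sketch below applies it to the 2019 values; `xfer_us` is a hypothetical helper, and it assumes that MB/s means 10^6 bytes per second, so that bytes divided by MB/s comes out directly in microseconds.

```sh
#!/bin/sh
# Estimated time in microseconds to move one message:
#   t = latency + size / bandwidth
# Assumption: bandwidth is in 10^6 bytes/s, so size_bytes / bandwidth is in microseconds.
xfer_us() {   # usage: xfer_us LATENCY_US BANDWIDTH_MBPS SIZE_BYTES
    awk -v lat="$1" -v bw="$2" -v size="$3" 'BEGIN { printf "%.1f\n", lat + size / bw }'
}

# A 10^6-byte message with the 2019 latency and bandwidth figures
xfer_us 3 7170 1000000    # SHM -> 142.5
xfer_us 3 6600 1000000    # OPA -> 154.5
xfer_us 86 117 1000000    # TCP -> 8633.0
```

Under this model a 1 MB transfer over TCP takes roughly 56 times longer than over OPA, and for large messages the bandwidth term dominates the latency term on every fabric.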

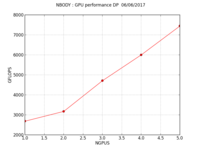

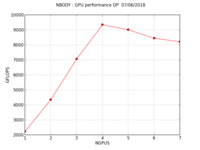

NBODY

Double-precision performance with 1 to 7 GPUs, measured with the nbody CUDA sample.

    #!/bin/sh
    #SBATCH --partition=gpu
    #SBATCH -N1 -n1 --gres=gpu:tesla:7
    #SBATCH --time 0-00:30:00

    module load cuda

    echo "#SLURM_JOB_NODELIST : $SLURM_JOB_NODELIST"
    echo "#CUDA_VISIBLE_DEVICES : $CUDA_VISIBLE_DEVICES"

    for N in 1 2 3 4 5 6 7
    do
        CMD="/hpc/share/tools/cuda/samples/5_Simulations/nbody/nbody -benchmark -numbodies=1024000 -fp64 -numdevices=$N"
        echo "# $CMD"
        eval $CMD >> nbody_scaling_dp.dat
    done
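The scaling log can be turned into speedup and parallel efficiency figures with a few lines of awk. This is a sketch under two assumptions: each run prints one line of the form `= <value> double-precision GFLOP/s ...`, and the k-th such line in the file belongs to `-numdevices=k`, as in the loop above. `nbody_scaling` is a hypothetical name.

```sh
#!/bin/sh
# Print "GPUs GFLOP/s speedup efficiency" for each run found in the log.
# Assumption: one "= <value> ... GFLOP/s ..." line per run, in order of GPU count.
nbody_scaling() {
    awk '/GFLOP\/s/ {
        n++                          # k-th match = run with k GPUs
        g = $2 + 0                   # second field holds the GFLOP/s value
        if (n == 1) base = g         # single-GPU run is the reference
        printf "%d %.1f %.2f %.2f\n", n, g, g / base, g / (base * n)
    }' "$1"
}
```

Because the job script appends with `>>`, the file should be removed before a new run, otherwise results from earlier jobs would be counted as additional GPU configurations.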

Results:

HPL

Configurations

HowTo: http://www.crc.nd.edu/~rich/CRC_Summer_Scholars_2014/HPL-HowTo.pdf

Automatic generation of the HPL.dat file: http://www.advancedclustering.com/act_kb/tune-hpl-dat-file/

Repository of some configurations for the HPC cluster: /hpc/share/tools/hpl/hpl-2.2/HPL.dat/
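A common rule of thumb for picking the problem size is to let the N x N matrix of 8-byte doubles fill a fixed fraction of the total memory and then round N down to a multiple of the block size NB. The sketch below implements that rule; `hpl_n` is a hypothetical helper and the 0.80 fraction is an assumption to be tuned, not a value taken from the configurations in the repository.

```sh
#!/bin/sh
# Largest N (multiple of NB) whose N x N matrix of 8-byte doubles fits in
# FRACTION of the total memory. FRACTION is a tuning assumption.
hpl_n() {   # usage: hpl_n NODES MEM_GIB_PER_NODE FRACTION NB
    awk -v nodes="$1" -v gib="$2" -v frac="$3" -v nb="$4" 'BEGIN {
        bytes = nodes * gib * 1024 * 1024 * 1024 * frac   # usable bytes
        n = int(sqrt(bytes / 8))                           # 8 bytes per element
        n -= n % nb                                        # round down to a multiple of NB
        printf "%d\n", n
    }'
}

hpl_n 1 192 0.80 192   # one 192 GiB node, NB = 192 -> 143424
```

Raising the fraction gives a larger N and usually a better Gflops figure, at the risk of running out of memory during the run.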

Executables

BDW

cd /hpc/share/tools/hpl/hpl-2.2

Main Make.bdw_intel settings:

    ARCH     = bdw_intel
    TOPdir   = /hpc/share/tools/hpl/hpl-2.2
    MPdir    = /hpc/share/tools/intel/compilers_and_libraries_2017.2.174/linux/mpi/intel64
    MPinc    = -I$(MPdir)/include
    LAdir    = /hpc/share/tools/intel/compilers_and_libraries_2017.2.174/linux/mkl/lib/intel64
    LAinc    = -I/hpc/share/tools/intel/compilers_and_libraries_2017.2.174/linux/mkl/include
    LAlib    = -Wl,--start-group $(LAdir)/libmkl_intel_lp64.a $(LAdir)/libmkl_intel_thread.a $(LAdir)/libmkl_core.a -Wl,--end-group -lpthread
    HPL_OPTS = -DHPL_CALL_CBLAS
    CC       = icc
    CCFLAGS  = $(HPL_DEFS) -xCORE-AVX2 -fomit-frame-pointer -O3 -funroll-loops -qopenmp
    LINKER   = mpiicc

Build:

    module load intel intelmpi
    make arch=bdw_intel clean
    make arch=bdw_intel

Executable: /hpc/share/tools/hpl/hpl-2.2/bin/bdw_intel/

KNL

cd /hpc/share/tools/hpl/hpl-2.2

Main Make.knl_intel settings:

    ARCH     = knl_intel
    TOPdir   = /hpc/share/tools/hpl/hpl-2.2
    MPdir    = /hpc/share/tools/intel/compilers_and_libraries_2017.2.174/linux/mpi/intel64
    MPinc    = -I$(MPdir)/include
    MPlib    = $(MPdir)/lib64/libmpi_mt.a
    LAdir    = /hpc/share/tools/intel/compilers_and_libraries_2017.2.174/linux/mkl/lib/intel64
    LAinc    = -I/hpc/share/tools/intel/compilers_and_libraries_2017.2.174/linux/mkl/include
    LAlib    = -Wl,--start-group $(LAdir)/libmkl_intel_lp64.a $(LAdir)/libmkl_intel_thread.a $(LAdir)/libmkl_core.a -Wl,--end-group -lpthread
    HPL_OPTS = -DHPL_CALL_CBLAS
    CC       = icc
    CCFLAGS  = $(HPL_DEFS) -xMIC-AVX512 -fomit-frame-pointer -O3 -funroll-loops -qopenmp
    LINKER   = mpiicc

Build:

    module load intel intelmpi
    make arch=knl_intel clean
    make arch=knl_intel

Executable: /hpc/share/tools/hpl/hpl-2.2/bin/knl_intel/
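The two builds target different instruction sets (`-xCORE-AVX2` and `-xMIC-AVX512`), so each executable should be run on the matching partition. A quick way to check which build fits a node is to look at its CPU flags. The sketch below is illustrative only: `hpl_arch` is a hypothetical helper, and it assumes that the `avx512er` flag identifies a KNL node while `avx2` without it identifies a Broadwell node.

```sh
#!/bin/sh
# Map a CPU flag list to one of the two HPL build targets used on this page.
# Assumption: avx512er marks a KNL node; avx2 without it marks a Broadwell node.
hpl_arch() {   # usage: hpl_arch "FLAGS..."
    case " $1 " in
        *" avx512er "*) echo knl_intel ;;
        *" avx2 "*)     echo bdw_intel ;;
        *)              echo unknown ;;
    esac
}

# On a Linux node the flag list can be read from /proc/cpuinfo
if [ -r /proc/cpuinfo ]; then
    hpl_arch "$(grep -m1 '^flags' /proc/cpuinfo | cut -d: -f2)"
fi
```

The result could be used to select the `bin/<arch>/xhpl` directory in a job script instead of hard-coding it.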

Run BDW

    #!/bin/bash
    #< Job Name
    #SBATCH --job-name="HPL-BDW_intel_8node"
    #< Project code
    #SBATCH -N8 -n256 --ntasks-per-node=32 --cpus-per-task=1 --exclusive

    cd $SLURM_SUBMIT_DIR
    echo SLURM_JOB_NODELIST=$SLURM_JOB_NODELIST
    scontrol show hostnames "$SLURM_JOB_NODELIST"

    module load intel intelmpi
    # One thread per MPI rank, matching --cpus-per-task
    export OMP_NUM_THREADS=${SLURM_CPUS_PER_TASK:-1}

    XHPL=/hpc/share/tools/hpl/hpl-2.2/bin/bdw_intel/xhpl

    #< nodes = 8 - core = 32 - mem = 128GB NBs = 192
    cp /hpc/share/tools/hpl/hpl-2.2/HPL.dat/HPL.8n.32c.128m.192.dat HPL.dat

    CMD="mpirun -psm2 -env I_MPI_DEBUG=6 -env I_MPI_HYDRA_DEBUG=on -env MKL_NUM_THREADS=$OMP_NUM_THREADS $XHPL"
    echo $CMD
    eval $CMD
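HPL needs at least P x Q MPI ranks for the process grid declared in HPL.dat, so the task count requested from Slurm has to agree with the file copied into the working directory. A minimal check, assuming the standard HPL.dat layout in which the grid lines end with the labels `Ps` and `Qs` and only one grid is listed (`hpl_ranks` is a hypothetical helper):

```sh
#!/bin/sh
# Print P*Q for the first process grid listed in an HPL.dat file.
# Assumption: standard layout, with the grid rows ending in "Ps" and "Qs".
hpl_ranks() {
    awk '$NF == "Ps" { p = $1 }
         $NF == "Qs" { q = $1 }
         END { print p * q }' "$1"
}

# Possible use inside a job script, before mpirun:
# [ "$(hpl_ranks HPL.dat)" -le "$SLURM_NTASKS" ] || { echo "not enough MPI ranks" >&2; exit 1; }
```

Failing early in the job script is cheaper than waiting for an exclusive multi-node allocation only to have the benchmark abort at start-up.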

Stdout

Run KNL

HPL.4n.68c.192m.192.dat

| N | free -h | result |
|---|---|---|
| 283776 | 163 GB | core dump |
| 141888 | 48 GB | core dump |
| 120382 | 38 GB | OK |
| 91392 | 24 GB | OK |

HPL.1n.68c.192m.192.dat

| node | N | free -h | result |
|---|---|---|---|
| wn51 | 147840 | 176 GB | OK |
| wn52 | 147840 | 176 GB | OK |
| wn53 | 147840 | 176 GB | OK |
| wn54 | 147840 | 176 GB | OK |
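For reference, the memory occupied by the matrix itself follows directly from N: it holds N x N doubles of 8 bytes each, spread over the nodes. The sketch below computes that lower bound per node; `hpl_gib_per_node` is a hypothetical helper, and the figure deliberately ignores HPL workspace and MPI buffers, so the real footprint is somewhat larger.

```sh
#!/bin/sh
# Memory taken per node by the N x N matrix of 8-byte doubles, in GiB.
# Lower bound only: HPL workspace and MPI buffers are not included.
hpl_gib_per_node() {   # usage: hpl_gib_per_node N NODES
    awk -v n="$1" -v nodes="$2" 'BEGIN {
        printf "%.1f\n", 8 * n * n / nodes / (1024 * 1024 * 1024)
    }'
}

hpl_gib_per_node 147840 1   # single-node runs -> 162.8
hpl_gib_per_node 91392 4    # four-node run    -> 15.6
```

Comparing this estimate with the free memory on the node before submitting gives a quick indication of whether a given N is likely to fit.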

    #!/bin/bash
    #< Job Name
    #SBATCH --job-name="HPL-KNL_intel_4node"
    #< Project code
    #SBATCH -N4 -n272 --ntasks-per-node=68 --cpus-per-task=1 -p knl --exclusive

    cd $SLURM_SUBMIT_DIR
    echo SLURM_JOB_NODELIST=$SLURM_JOB_NODELIST
    scontrol show hostnames "$SLURM_JOB_NODELIST"

    module load intel intelmpi
    # One thread per MPI rank, matching --cpus-per-task
    export OMP_NUM_THREADS=${SLURM_CPUS_PER_TASK:-1}

    XHPL=/hpc/share/tools/hpl/hpl-2.2/bin/knl_intel/xhpl

    cp /hpc/share/tools/hpl/hpl-2.2/HPL.dat/HPL.4n.68c.190m.192.dat HPL.dat

    #CMD="mpirun hostname"
    CMD="mpirun -psm2 -env I_MPI_DEBUG=6 -env I_MPI_HYDRA_DEBUG=on -env MKL_NUM_THREADS=$OMP_NUM_THREADS $XHPL"
    echo $CMD
    eval $CMD

Stdout

    ================================================================================
    T/V                N    NB     P     Q               Time                 Gflops
    --------------------------------------------------------------------------------
    WR00L2L2       91392   336     8    34             118.20              4.305e+03
    HPL_pdgesv() start time Tue Jun  6 13:38:59 2017
    HPL_pdgesv() end time   Tue Jun  6 13:40:57 2017
    --------------------------------------------------------------------------------
    ||Ax-b||_oo/(eps*(||A||_oo*||x||_oo+||b||_oo)*N)=        0.0009457 ...... PASSED
    ================================================================================
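The Gflops column can be cross-checked from N and the run time, since HPL counts 2/3 N^3 + 3/2 N^2 floating-point operations for the solve. The helper name `hpl_gflops` below is hypothetical; the operation count is the one HPL itself uses for its report.

```sh
#!/bin/sh
# Recompute the HPL Gflops figure from the problem size and the wall time.
# Operation count: 2/3*N^3 + 3/2*N^2 floating-point operations.
hpl_gflops() {   # usage: hpl_gflops N SECONDS
    awk -v n="$1" -v t="$2" 'BEGIN {
        printf "%.1f\n", (2 * n * n * n / 3 + 1.5 * n * n) / t / 1e9
    }'
}

hpl_gflops 91392 118.20   # -> 4305.5
```

The value agrees with the reported 4.305e+03; the small difference in the last digit comes from the time being printed with only two decimals.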